An investigation into online child safety reveals how TikTok repeatedly pushed videos fuelling feelings of depression and anger, including content inspired by Andrew Tate, unprompted to a 13-year-old

13-year-olds are being bombarded with “extremely dangerous” mental health content on social media, including videos that experts believe could lead young teenagers to depression or suicide.

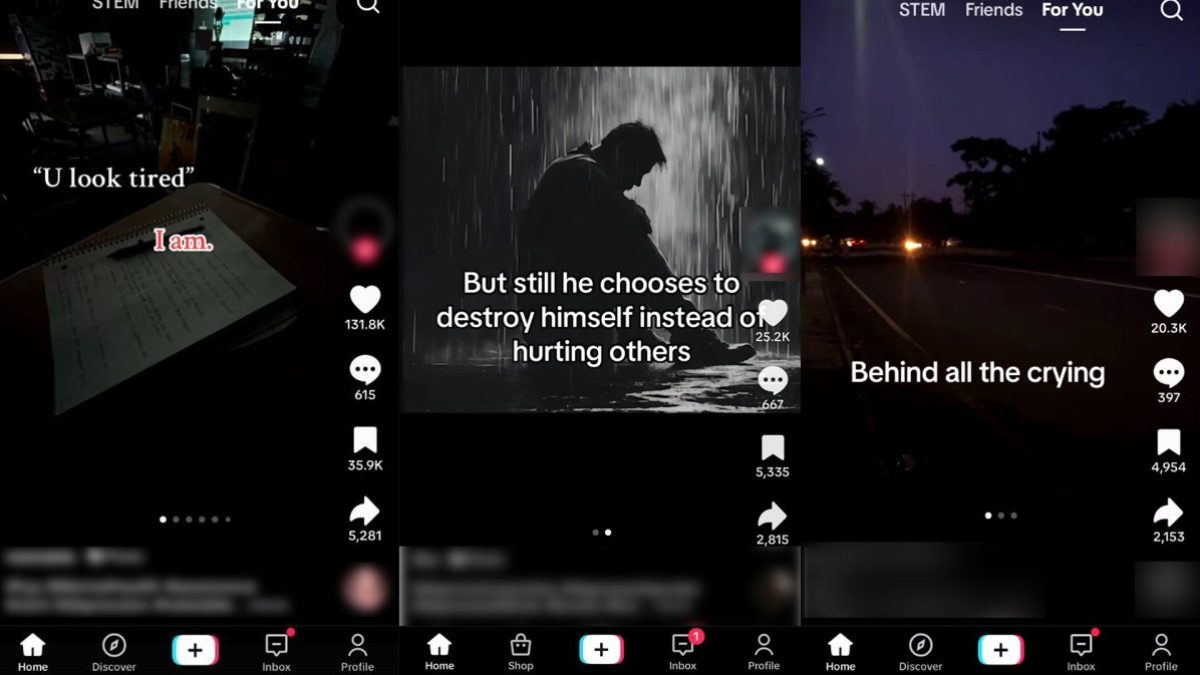

An investigation by The i Paper into social media content pushed to children set up a fictional TikTok account for a typical 13-year-old boy and found that within minutes the account faced a barrage of disturbing videos questioning his mental state.

Without searching for any information about mental health issues, over the course of 45 minutes, the account was pushed a range of potentially dangerous content at a rate of once every two minutes.

Our investigation found:

- The account was inundated with videos about feeling depressed or lonely, including references to suicide;

- The first such clip talking about depression was shown after less than 90 seconds of him being on the app;

- Seven videos featuring depression were shown in less than 45 minutes, working out as one every six minutes;

- Aggressive “motivational” videos popularised by controversial influencer Andrew Tate were also repeatedly pushed;

- Twelve “toxic” masculinity videos were shown in 45 minutes, promoting the importance of hiding emotions and instead building physical strength.

The findings come as part of a wider investigation into child online safety, which also found the Instagram account of a fictional 13-year-old girl was pushed over-simplified videos about having ADHD and autism. Psychologists fear some children who watched this content would falsely believe they have these complex conditions, causing distress and anxiety.

The revelations, which suggest other teen accounts could have been similarly targeted, have prompted calls from MPs and campaigners for social media companies to act urgently and strengthen the level of restrictions on children’s accounts.

Experts believe the TikTok algorithm repeatedly pushed videos promoting depression to the account of a 13-year-old boy because its data suggests young boys are more likely to engage with this content or seek it out. TikTok, like all social media platforms, wants to increase potential advertising revenue by getting users to stay on the app as long as possible.

Helen Hayes MP, Chair of the House of Commons’ Education Committee, said: “The damning evidence revealed by this investigation shows how the most popular social media platforms continue to direct content to children that is currently legal but harmful, highly addictive or which can spread misinformation to children about their own health and well-being.”

The father of Molly Russell, a 14-year-old girl who died after viewing harmful content online, wrote to Prime Minister Keir Starmer last weekend warning the UK is “going backwards” on online safety.

Ian Russell said: “The streams of life-sucking content seen by children will soon become torrents: a digital catastrophe”.

Russell is the chair of the Molly Rose Foundation, which along with two leading psychologists viewed the videos shown to the fictional 13-year-olds created by this paper.

They said the evidence uncovered raised serious concerns and called on social media companies to ensure all teenagers’ accounts are automatically set to the most restricted level of content, which should help prevent harmful videos being served to children.

At the moment it is up to the teenager or their parents to turn these settings on, except on Instagram, which is the only app where this is done automatically.

In particular, they criticised companies’ algorithms repeatedly pushing potentially harmful videos to teen users and called for individual posts on topics such as depression to come with signposts for help.

‘You don’t want to be here no more’: the videos pushed to young boys

Harry, our fictional 13-year-old, was shown a sombre video of a school exercise book on a desk with a softly-spoken female voiceover saying: “Depression can be invisible”.

She continues: “It’s going to work or school and excelling at everything you do but falling apart when you’re back home. It’s being the most entertaining person in the room while you’re feeling empty inside.”

This video, like the others, had no signposts towards resources for help.

Other posts depicted male voices screaming about being a failure. One cried that he wanted to kill himself, saying: “Do you know how hard it is just to f**king tell someone… you don’t want to be here no more?”.

One TikTok user asked viewers to dramatise their feelings and relationship with someone who caused them upset, writing: “If you wrote a book about the person who hurt you the most, what would the first sentence be?”.

A fifth video played emotive music while showing a message stating, “I feel as if I’m nothing… I’m tired, I’m lost, I’m struggling with life”.

It goes on: “I’m never happy, I always just fake it. I’m always annoying people, disappointing people, making mistakes, I feel like there’s something wrong with me.”

The chief executive of the Molly Rose Foundation, Andy Burrows, said: “When viewed in quick succession this content can be highly harmful, particularly for teenagers who may be struggling with poor mental health for whom it can reinforce negative feelings and rumination around self-worth and hopelessness.”

He added: “When setting out their child safety duties the regulator Ofcom must consider how algorithmically-suggested content can form a toxic cocktail when recommended together and take steps to compel companies to tackle this concerning problem.”

In response to The i Paper’s investigation, Ofcom, which will soon take on powers to fine social media companies if they breach new legal safeguards under the Online Safety Act, criticised social media platforms for using algorithms to push such content.

A spokesperson for Ofcom said “algorithms are a major pathway to harm online… We expect companies to be fully prepared to meet their new child safety duties when they come in to force”.

Many people turn to social media for help with their mental health. However, the videos pushed to the fictional teen boy’s profile in this investigation are potentially harmful as they amplified feelings of sadness and did not direct viewers to sources of help.

Dr Nihara Krause, who has created a number of mental health apps and works with Ofcom, said the impact on a teenager repeatedly seeing these videos is to be taken seriously.

“If you’ve got something coming up with the frequency of every six minutes and you’re in a very vulnerable state of mind… then that can all be quite inviting to a teenager in a very horrific way,” she said.

This investigation comes after an Ofcom report found 22 per cent of eight- to 17-year-olds lie that they are 18 or over on social media apps and therefore are evading attempts by these apps to show age-appropriate content.

But The i Paper’s report shows even when a teenager logs on with a child’s account, they are being pushed harmful and inappropriate content, even without the young person seeking these videos out.

TikTok was sent links to the harmful content pushed to the fictional boy’s account and removed some of the content which it agreed had violated its safeguarding rules.

A spokesperson for TikTok said: “TikTok has industry-leading safety settings for teens, including systems that block content that may not be suitable for them, a default 60-minute daily screen time limit and family pairing tools that parents can use to set additional content restrictions.”

Instagram did not comment but recently launched “Teen Accounts”, which are marketed as having built-in protections for teenagers.

A Government spokesperson said: “Children must be protected online. Over the coming months the Online Safety Act will put in place strong protections for children and hold social media companies accountable for the safety of their users. Platforms must take action by law, including putting in highly effective age checks and altering their algorithms to filter out harmful content so children can have safe experiences on their sites.”

Anyone feeling emotionally distressed or suicidal can call Samaritans for help on 116 123 or email jo@samaritans.org in the UK.

How we carried out the investigation

The i Paper used a factory-reset phone and set up a new email address and social media accounts for the fictional children used in the experiment.

Their ages were set to 13 in the apps, on the handset and when setting up the email address created for them. You must be at least 13 to make an account on these apps.

Neither the account nor the handset was used to seek out any content.

Content pushed to the account was watched or skipped depending on whether it would appeal to an average 13-year-old. This was partly informed by Ofcom research into what online content is popular among children of this age range, broken down by category.

A total of 45 minutes was spent on TikTok and Instagram across a series of shorter sessions at times a child would use them, such as after school or at the weekend.

The sessions were all screen recorded and logged into a database, with further investigation into accounts, related hashtags and related videos carried out after the initial investigation was concluded.